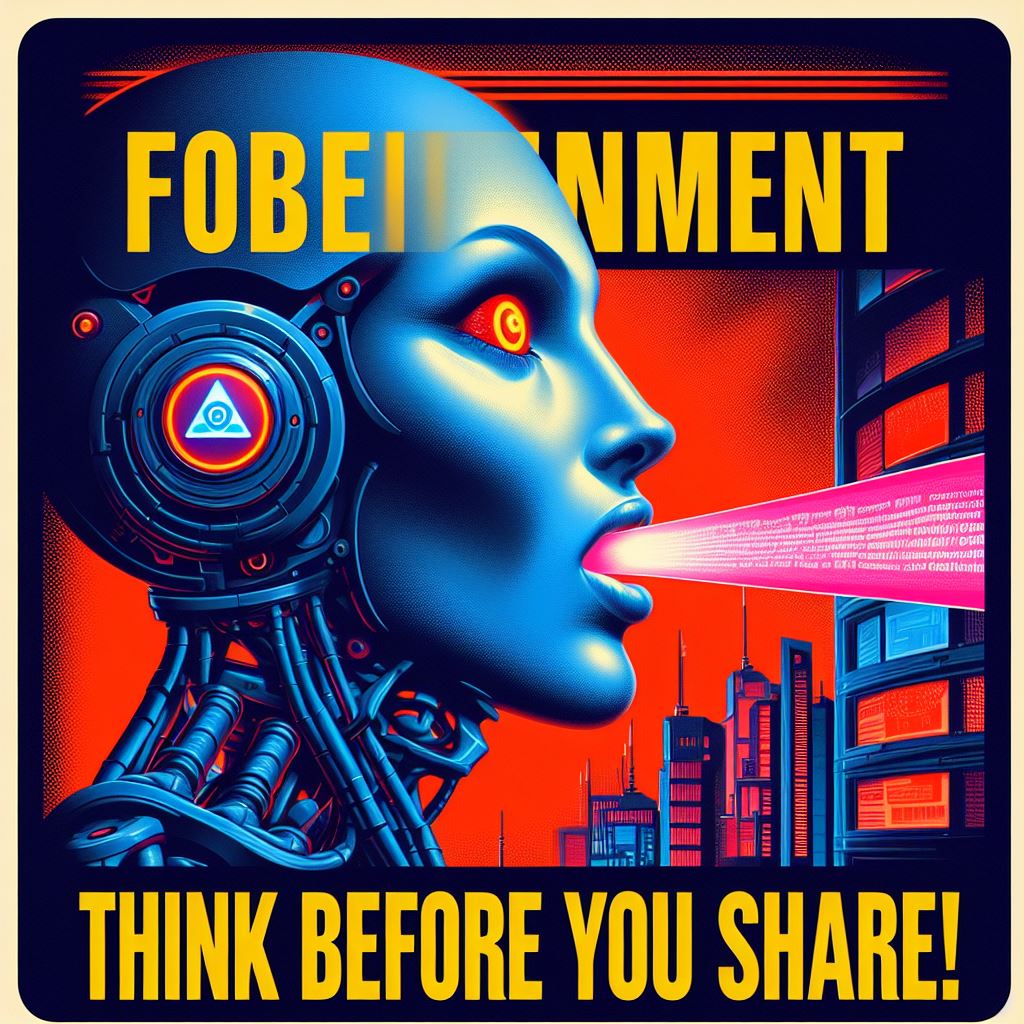

In an era when technological advances continue to reshape society, concerns about the authenticity and manipulation of digital content have become widespread. The latest development in this area is a government warning about AI-generated content. As artificial intelligence technologies evolve rapidly, governments around the world are paying closer attention to how AI-generated content could affect misinformation, public trust, and national security.

AI-generated content refers to text, images, video, or audio created or manipulated using artificial intelligence algorithms. These algorithms can produce highly realistic output, making it difficult for viewers to distinguish authentic material from AI-generated material. While AI has many beneficial applications across industries, its potential misuse for spreading misinformation or manipulating public opinion has raised significant concerns among policymakers.

The government’s warning on AI-generated content underscores the pressing need for comprehensive regulation and monitoring frameworks to mitigate the risks of its spread. Without adequate safeguards, AI-generated content could be used to deceive individuals, manipulate public discourse, and undermine democratic processes. For this reason, governments increasingly recognize the urgency of addressing the issue to protect societal integrity and stability.

One of the primary concerns highlighted by government authorities is the potential for AI-generated content to fuel misinformation campaigns and sow discord within communities. With the ability to create convincing fake news articles, social media posts, or videos, malicious actors could exploit AI technology to disseminate false information at an unprecedented scale. Such misinformation campaigns have the potential to influence public opinion, incite social unrest, and even undermine the legitimacy of democratic institutions.

Moreover, the widespread dissemination of AI-generated content poses significant challenges for traditional methods of content verification and fact-checking. While human moderators and fact-checkers play a crucial role in identifying and flagging misinformation, the sheer volume and sophistication of AI-generated content can overwhelm manual verification processes. As a result, there is a growing need for innovative technological solutions and automated tools to detect and combat AI-generated misinformation effectively.

Beyond the realm of misinformation, AI-generated content also raises concerns about its potential impact on national security and geopolitical stability. Sophisticated AI algorithms can manipulate audio and video recordings to create convincing deepfake content, depicting individuals saying or doing things they never actually did. In the context of international relations, such deepfake videos could exacerbate diplomatic tensions, trigger conflicts, or undermine trust between nations. Therefore, governments must collaborate on a global scale to develop strategies for detecting and mitigating the risks posed by AI-generated deepfakes.

Furthermore, the proliferation of AI-generated content has significant implications for intellectual property rights and creative industries. As AI algorithms become increasingly proficient at generating original artworks, music compositions, or written texts, questions arise regarding the ownership and copyright of such creations. Clarifying legal frameworks and establishing guidelines for attributing authorship and ownership of AI-generated content are essential to protect the rights of creators and foster innovation in the digital age.

In response to these challenges, governments are taking proactive measures to address the risks associated with AI-generated content. These measures include investing in research and development to improve technologies for detecting and combating AI-generated misinformation; fostering collaboration between tech companies, academic institutions, and civil society organizations; and enacting legislation to regulate the creation and dissemination of AI-generated content.

Additionally, government agencies are working closely with social media platforms and technology companies to develop tools and protocols for identifying and removing AI-generated misinformation from online platforms. By combining artificial intelligence, machine learning, and human expertise, these initiatives aim to make online ecosystems more resilient against deceptive AI-generated content.

Ultimately, addressing the multifaceted challenges posed by AI-generated content requires a concerted effort from governments, tech companies, civil society, and other stakeholders. It calls for a holistic approach that combines regulatory interventions, technological innovation, and public awareness campaigns to mitigate the risks and promote the responsible use of AI technologies.

The government’s warning on AI-generated content highlights the urgent need for collective action to address the challenges posed by the proliferation of AI-generated misinformation, deepfakes, and other deceptive content. By fostering collaboration and innovation, governments can mitigate the risks associated with AI-generated content and uphold the integrity of information ecosystems in the digital age.
